AI's New Watchdog Role: A Necessary Evil or a Step Too Far?

Published by New Straits Times and The Star on 11 Sep 2025

by Thulasy Suppiah, Managing Partner

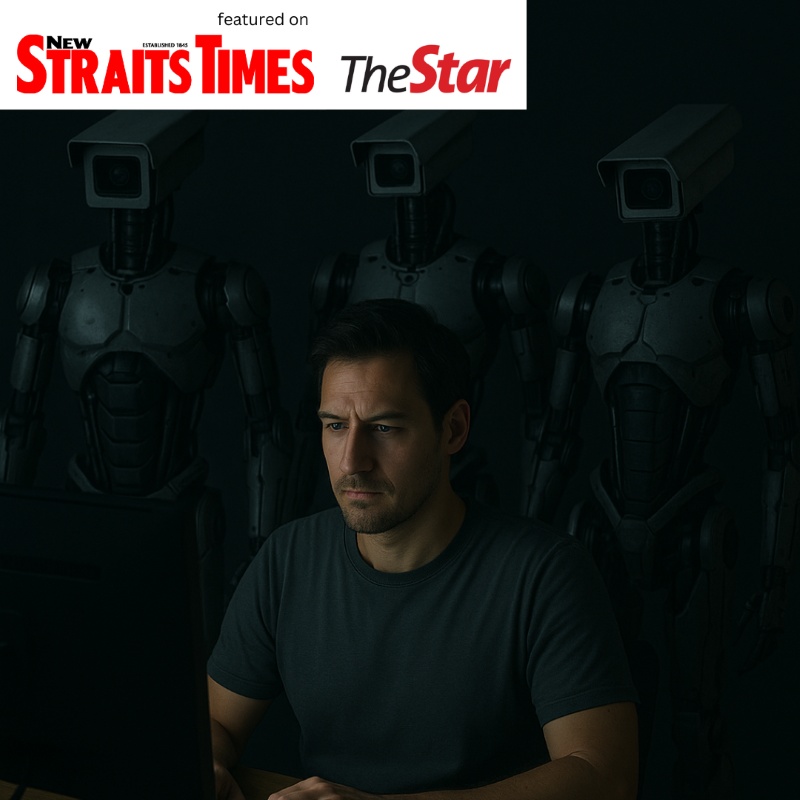

The recent disclosure by OpenAI that it is scanning user conversations and reporting certain individuals to law enforcement is a watershed moment. This is not merely a single company’s policy update; it is the opening of a Pandora’s box of ethical, legal, and societal questions that will define our future relationship with artificial intelligence.

On the one hand, the impulse behind this move is tragically understandable. These powerful AI tools, for all their potential, have demonstrated a capacity to cause profound real-world harm. Consider the devastating case of Adam Raine, the teenager who died by suicide after his anxieties were reportedly validated and encouraged by ChatGPT. In the face of such genuine, actual harm, the argument for intervention by AI operators is compelling. A platform that can be used to plan violence cannot feign neutrality.

On the other hand, the solution now being pioneered by an industry leader is deeply unsettling. While OpenAI has clarified it will not report instances of self-harm, citing user privacy, the fundamental act of systematically scanning all private conversations to preemptively identify other threats sets a chilling, Orwellian precedent. It inches us perilously close to a world of pre-crime, where individuals are flagged not for their actions, but for their thoughts and words. This raises a fundamental question: where do we draw the line? Should a user who morbidly asks any AI “how to commit the perfect murder” be arrested and interrogated? If this becomes the industry standard, we risk crossing over into a genuine dystopia.

This move is made all the more problematic by the central contradiction it exposes. OpenAI justifies this immense privacy encroachment as a necessary safety measure, yet it simultaneously presents itself as a staunch defender of user privacy in its high-stakes legal battle with the New York Times. It cannot have it both ways. This reveals the untenable position of a company caught between the catastrophic consequences of its own technology and a heavy-handed response that flies in the face of its public promises—a dilemma that any AI developer adopting a similar watchdog role will inevitably face.

We are at a critical juncture. The danger of AI-facilitated harm is real, but so is the danger of ubiquitous, automated surveillance becoming the norm. This conversation, sparked by OpenAI, cannot remain confined to the tech industry and its regulators; it is now a matter for society at large. We urgently need a broad public debate to establish clear and transparent protocols for how such situations are handled by the entire industry, and how they are treated by law enforcement and the judiciary. Without them, we risk normalizing a future governed by algorithmic suspicion. This is a line that, once crossed, may be impossible to uncross.

© 2025 Suppiah & Partners. All rights reserved. The contents of this newsletter are intended for informational purposes only and do not constitute legal advice.
